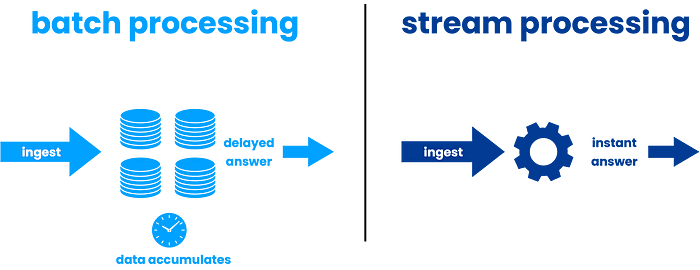

Stream processing handles data as it arrives, which makes it a good fit for low-latency analytics and fast responses. Batch processing works through large data sets at scheduled times, which makes it a better fit for high-volume jobs that do not need immediate results.

Introduction to Data Processing Techniques

Data processing turns raw data into useful insights, which supports decision-making and planning in many fields. Real-time and batch processing are the two main methods for keeping that data current and accurate.

What is Data Processing?

Data processing turns raw data into useful information through several steps:

- Collection: Getting raw data from different places.

- Storage: Keeping the data in databases or data warehouses.

- Organization: Sorting and organizing data for easy access.

- Analysis: Using techniques to understand and gain insights from the data.

Real-time and batch data processing have their own ways and benefits. Real-time processing works with data as it comes in. Batch processing handles data at set times.

Why Data Processing is Important

Good data processing matters to companies for several reasons:

- Informed Decision-Making: Helps businesses make smart choices, lowering risks and improving results.

- Trend Identification: Finds trends and patterns for innovation and staying ahead.

- Operational Efficiency: Makes processes smoother and boosts productivity with accurate data.

Poor data processing can lead to inaccurate information, flawed plans, and financial losses. Good data management, by contrast, supports better performance and growth.

Getting full value from data depends on understanding both real-time and batch processing and applying each where it fits.

| Data Processing Method | Characteristics | Use Cases |
|---|---|---|
| Real-Time Data Processing | Processes data as it is generated | Live tracking, instant analytics |
| Batch Data Processing | Processes data at scheduled intervals | Data warehousing, periodic reporting |

What is Stream Processing?

Stream processing handles data in real time, as it arrives. It matters when quick insights and fast decisions depend on the latest data.

Overview of Stream Processing

Stream processing means analyzing data as it is generated, unlike batch processing, which handles large chunks of data at set times. Apache Kafka, an event streaming platform, and MQTT, a lightweight publish/subscribe messaging protocol common in IoT, are often used to move these data flows reliably.

How Stream Processing Works

Stream processing runs as a continuous cycle of ingesting, processing, and emitting data. It takes in data from sources such as sensors and social media feeds, then processes each record right away so that insights can be acted on quickly.
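
The ingest, process, emit cycle can be sketched with plain Python generators. This is only an illustration: `sensor_source`, `process`, `sink`, the readings, and the 30 °C limit are all made up for the example, and a real deployment would read from a broker or device feed rather than an in-memory list.

```python
from typing import Iterable, Iterator


def sensor_source() -> Iterator[dict]:
    """Hypothetical source: yields one reading at a time, as a live feed would."""
    readings = [21.5, 22.0, 35.2, 21.8]  # made-up temperatures in Celsius
    for seq, temp in enumerate(readings):
        yield {"sensor_id": "s1", "seq": seq, "temp_c": temp}


def process(events: Iterable[dict], limit_c: float) -> Iterator[dict]:
    """Handle each event as soon as it arrives; nothing is buffered."""
    for event in events:
        yield {**event, "overheated": event["temp_c"] > limit_c}


def sink(results: Iterable[dict]) -> list[dict]:
    """Emit results downstream; here they are printed and collected."""
    emitted = []
    for result in results:
        print(result)
        emitted.append(result)
    return emitted


# Each reading flows through all three stages before the next one is read.
emitted = sink(process(sensor_source(), limit_c=30.0))
```

Because generators are lazy, each reading passes through every stage before the next one is pulled from the source, which mirrors the record-at-a-time behavior described above.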

Common Use Cases

Stream processing is used in many ways:

- Financial Transactions: It tracks stock trades and catches fraud in real-time.

- Social Media Feeds: It keeps feeds current by giving users quick updates and timely recommendations.

- IoT Sensor Data: It watches and uses data from smart devices for smart homes and businesses.

Adding stream processing to your data strategy lets your systems handle data continuously and respond quickly as conditions change.

What is Batch Processing?

Batch processing is a core approach to handling large data sets. Unlike stream processing, which deals with data as it arrives, batch processing works through large chunks of data at set times. It suits complex tasks on big datasets where immediate results are not required.

Overview of Batch Processing

Batch processing is structured and runs on a schedule. Companies collect data over a period and then process it all at once. This suits tasks such as end-of-day transaction processing or report generation.

How Batch Processing Works

The steps in batch processing are:

- Data Collection: Data is gathered from different places and stored.

- Data Validation: The data is checked to make sure it is accurate and complete.

- Data Processing: Jobs are run in batches using tools like Apache Hadoop. These jobs can sort, filter, and aggregate the data.

- Output Generation: The processed data is then saved or delivered in formats such as reports or analytics.
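
The four steps above can be sketched as a small end-of-day job. This is a minimal illustration in plain Python rather than Hadoop: the CSV contents, the account names, and the helper functions `collect`, `validate`, `process_batch`, and `generate_output` are all assumptions made for the example.

```python
import csv
import io
from collections import defaultdict

# Hypothetical day of transactions, gathered before the job runs.
RAW_CSV = """account,amount
A,10.50
B,4.00
A,not-a-number
B,6.00
"""


def collect(raw: str) -> list[dict]:
    """Data collection: read everything that accumulated during the day."""
    return list(csv.DictReader(io.StringIO(raw)))


def validate(rows: list[dict]) -> tuple[list[dict], list[dict]]:
    """Data validation: keep rows with a numeric amount, set the rest aside."""
    valid, rejected = [], []
    for row in rows:
        try:
            valid.append({"account": row["account"], "amount": float(row["amount"])})
        except (KeyError, TypeError, ValueError):
            rejected.append(row)
    return valid, rejected


def process_batch(rows: list[dict]) -> dict[str, float]:
    """Data processing: aggregate the whole batch in one pass."""
    totals: dict[str, float] = defaultdict(float)
    for row in rows:
        totals[row["account"]] += row["amount"]
    return dict(totals)


def generate_output(totals: dict[str, float]) -> str:
    """Output generation: render a simple text report."""
    return "\n".join(f"{acct}: {total:.2f}" for acct, total in sorted(totals.items()))


valid, rejected = validate(collect(RAW_CSV))
totals = process_batch(valid)
report = generate_output(totals)
print(report)
print(f"rejected rows: {len(rejected)}")
```

Nothing is computed until the full data set is available, which is the defining trait of a batch job. A framework such as Hadoop applies the same pattern across many machines.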

Batch processing relies on frameworks such as Hadoop because they distribute work across a cluster of machines and can therefore handle very large data volumes.

Stream Processing vs Batch Processing

Choosing between stream and batch processing is a significant design decision. Each has strengths that suit different needs, and the choice affects how quickly and efficiently data is handled.

Stream processing suits immediate data analysis, such as live dashboards, fraud checks, and quick recommendations. It helps spot trends and problems as they occur, so responses are faster.

Batch processing is better for large jobs run at set times. It is used for data warehousing, long-term analysis, and reporting, and it processes large volumes efficiently, which saves cost and resources.

Here’s a quick look at the main differences:

| Aspect | Stream Processing | Batch Processing |
|---|---|---|
| Data Processing Speed | Real-time | Scheduled intervals |
| Latency | Low | Higher |
| Throughput | Often lower, depends on setup | Often higher, depends on setup |
| Use Cases | Live analytics, monitoring, fraud detection | Data warehousing, periodic reporting, long-term analysis |
| Scalability | High | Moderate |
| Cost Efficiency | Depends on use case | Generally better |

In short, knowing the strengths of stream and batch processing helps businesses pick the right one, whether the goal is quick insight or deeper analysis that can wait.

Key Differences Between Stream and Batch Processing

It’s important to know how stream and batch processing differ. Each has its own strengths and weaknesses, and the right choice depends on what your data needs are.

Data Processing Speed

Stream processing handles each record as it arrives, which suits real-time needs. Batch processing waits until a batch has been collected, so results arrive later, but each job can work over the complete data set.

Latency and Throughput

Latency is how long it takes to get a result after data arrives. Throughput is how much data is processed per unit of time. Stream processing has low latency, which suits fast insights. Batch processing has higher latency but handles large jobs well. Throughput for either approach depends on how the system is set up.
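
A small worked calculation can make the two terms concrete. Every number below is an assumption chosen for illustration (one event per second for an hour, and a lower per-event cost inside the batch job to stand in for amortized overhead). None of these numbers is a measurement of any real system.

```python
# Assumed workload: one event per second for one hour.
EVENTS = 3600
ARRIVAL_INTERVAL_S = 1.0
STREAM_COST_S = 0.005  # assumed per-event handling time in the stream job
BATCH_COST_S = 0.001   # assumed per-event handling time inside the batch job

arrivals = [i * ARRIVAL_INTERVAL_S for i in range(EVENTS)]

# Stream: each event is handled on arrival, so latency is just the handling time.
stream_latencies = [STREAM_COST_S for _ in arrivals]

# Batch: the job starts when the hour closes, and results are available
# only once the whole job has finished.
window_end = EVENTS * ARRIVAL_INTERVAL_S
batch_finish = window_end + EVENTS * BATCH_COST_S
batch_latencies = [batch_finish - arrived for arrived in arrivals]


def mean(values: list[float]) -> float:
    return sum(values) / len(values)


stream_mean_latency = mean(stream_latencies)
batch_mean_latency = mean(batch_latencies)

# Throughput while a job is actually running, in events per second of compute.
stream_throughput = 1 / STREAM_COST_S
batch_throughput = 1 / BATCH_COST_S

print(f"mean latency  stream: {stream_mean_latency:.3f} s, batch: {batch_mean_latency:.1f} s")
print(f"throughput    stream: {stream_throughput:.0f}/s, batch: {batch_throughput:.0f}/s")
```

Under these assumptions the batch job moves more events per second of compute, yet the average event waits about half an hour for its result. That is the latency and throughput trade-off in miniature.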

Cost Efficiency

Processing costs can differ a lot between the two. Stream processing tends to be cost-effective for constant data flows. Batch processing tends to be cheaper for large, scheduled jobs and uses fewer resources over time.

| Criteria | Stream Processing | Batch Processing |
|---|---|---|
| Speed | Near-Instantaneous | Interval-Based |
| Latency | Low | High |
| Throughput | Variable | Variable |
| Cost Efficiency | High for constant data | High for large datasets |

When to Use Stream Processing

Stream processing has clear advantages over traditional batch methods in some situations. The sections below look at where it shines, especially real-time analytics, continuous updates, and monitoring.

Real-Time Analytics

Stream processing is well suited to real-time analytics. In fraud detection, for example, analyzing transactions as they occur lets suspicious activity be stopped quickly. It is also useful for tracking user behavior, so companies can react quickly to what users do.
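
As a rough sketch of per-event fraud screening, the example below flags a card that makes too many transactions within a short window. The `VelocityCheck` class, the 60-second window, the limit of three transactions, and the sample events are all hypothetical. Real fraud detection uses far richer signals, and this sketch also assumes events arrive in timestamp order.

```python
from collections import defaultdict, deque

WINDOW_S = 60  # assumed look-back window in seconds
MAX_TXNS = 3   # assumed number of transactions allowed per card in the window


class VelocityCheck:
    """Flags a card when it exceeds max_txns within the sliding window."""

    def __init__(self, window_s: int, max_txns: int) -> None:
        self.window_s = window_s
        self.max_txns = max_txns
        self.recent: dict[str, deque] = defaultdict(deque)

    def observe(self, card_id: str, timestamp: int) -> bool:
        """Record one transaction and return True if it should be flagged."""
        times = self.recent[card_id]
        times.append(timestamp)
        # Drop transactions that have fallen out of the window.
        while timestamp - times[0] > self.window_s:
            times.popleft()
        return len(times) > self.max_txns


check = VelocityCheck(WINDOW_S, MAX_TXNS)
stream = [
    ("card-1", 0), ("card-1", 10), ("card-2", 15),
    ("card-1", 20), ("card-1", 25), ("card-1", 200),
]
flags = [(card, ts, check.observe(card, ts)) for card, ts in stream]
for card, ts, flagged in flags:
    print(card, ts, "FLAG" if flagged else "ok")
```

Only the fourth `card-1` transaction inside the window is flagged, and the decision is made the moment that event arrives rather than at the end of the day.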

Continuous Data Updates

Stream processing also keeps data fresh. This is vital in fast-changing environments, where information must stay accurate and relevant.
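
One way to picture continuous updates is an aggregate that is revised with every new event instead of being recomputed from scratch. The `RunningMean` class and the price values below are made up for illustration.

```python
class RunningMean:
    """Keeps an average up to date without storing past values."""

    def __init__(self) -> None:
        self.count = 0
        self.mean = 0.0

    def update(self, value: float) -> float:
        """Fold one new value into the average and return the fresh result."""
        self.count += 1
        self.mean += (value - self.mean) / self.count
        return self.mean


tracker = RunningMean()
history = [tracker.update(price) for price in [10.0, 20.0, 30.0]]
print(history)
```

After each event the stored value already reflects everything seen so far, so readers of that value never wait for a scheduled refresh.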

Monitoring and Alerting Systems

Stream processing is also good for monitoring systems. It checks for security and performance issues as they happen. This leads to quick alerts and fixes, keeping systems safe and running well.
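
A monitoring rule of this kind can be sketched as a rolling average with a threshold. The function name `alerts`, the window of three samples, the 250 ms threshold, and the latency samples are assumptions for the example.

```python
from collections import deque
from typing import Iterable, Iterator


def alerts(
    samples: Iterable[tuple[int, float]],
    window: int = 3,
    threshold_ms: float = 250.0,
) -> Iterator[tuple[int, float]]:
    """Yield (timestamp, rolling average) whenever the average exceeds the threshold."""
    recent: deque = deque(maxlen=window)  # old samples drop off automatically
    for timestamp, latency_ms in samples:
        recent.append(latency_ms)
        average = sum(recent) / len(recent)
        # Only alert once the window is full, to avoid noisy start-up alerts.
        if len(recent) == window and average > threshold_ms:
            yield timestamp, average


# Hypothetical (timestamp, response time in ms) samples from a service.
samples = [(1, 100), (2, 120), (3, 110), (4, 400), (5, 450), (6, 130)]
fired = list(alerts(samples))
for timestamp, average in fired:
    print(f"t={timestamp}: rolling average {average:.1f} ms exceeds threshold")
```

Averaging over a short window is a deliberate choice here: the single spike at `t=4` does not trigger an alert, while a sustained rise does.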

Here’s a quick look at where stream processing really shines:

| Use Case | Advantages |
|---|---|
| Real-Time Analytics | Immediate data analysis for fraud detection and user behavior |
| Continuous Data Updates | Ongoing updates for accurate and relevant data |
| Monitoring and Alerting Systems | Proactive issue detection and instant alerts |

When to Use Batch Processing

Data Warehousing

Batch processing is well suited to data warehousing. It gathers data from many sources into one place, which helps businesses base decisions on a complete picture of their data.
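
The consolidation step can be sketched with Python's built-in `sqlite3` module standing in for a warehouse. The two source extracts, their field names, and the `sales` table are hypothetical. A real warehouse load would read from actual source systems and usually run on a schedule.

```python
import sqlite3

# Hypothetical extracts from two source systems with different field names.
crm_rows = [
    {"customer": "acme", "revenue": 1200.0},
    {"customer": "globex", "revenue": 800.0},
]
shop_rows = [{"client_name": "acme", "total": 300.0}]


def load_warehouse(conn: sqlite3.Connection) -> None:
    """Map both sources onto one schema and load them in a single batch."""
    conn.execute("CREATE TABLE sales (source TEXT, customer TEXT, amount REAL)")
    unified = [("crm", row["customer"], row["revenue"]) for row in crm_rows]
    unified += [("shop", row["client_name"], row["total"]) for row in shop_rows]
    conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", unified)
    conn.commit()


conn = sqlite3.connect(":memory:")  # in-memory database, for the example only
load_warehouse(conn)

# With everything in one place, cross-source questions become one query.
by_customer = conn.execute(
    "SELECT customer, SUM(amount) FROM sales GROUP BY customer ORDER BY customer"
).fetchall()
print(by_customer)
```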

Data Backup and Recovery

Batch processing also suits backups. Backup jobs run automatically on a schedule, so data stays protected and is easy to restore if it is lost.

Enterprise Reporting

Batch processing is also a good fit for reporting. It handles large volumes of historical data well and produces the detailed reports that leaders use for major decisions.

| Use Case | Description | Advantages |
|---|---|---|
| Data Warehousing | Consolidates data from various sources into a central repository for business intelligence. | Facilitates informed decision-making. |
| Data Backup and Recovery | Performs systematic backups and supports effective recovery plans. | Maintains data integrity and enables quick recovery. |
| Enterprise Reporting | Generates complex reports by aggregating vast amounts of historical data. | Supports strategic planning and decision-making. |

Conclusion

Understanding the difference between stream and batch processing is central to data management. Stream processing suits real-time analytics and continuous updates. Batch processing suits data warehousing and reporting.

Choosing between them depends on your needs, with speed, latency, and cost as the main factors. Weighing these will point you to the best approach for your business.

Knowing both approaches helps you make better decisions and turn your data into a valuable asset that drives your business forward.
