Why Choose Upstaff’s AI Prompt Engineers?


AI prompt engineers leverage LLMs to automate software development. We vet engineers to help you find talent with expertise in NLP, Python, AI frameworks, and more who can become part of your team. Here’s what sets us apart:

- Fast matchmaking: Receive vetted AI prompt engineer profiles in 1-3 days, 50+ hours faster than traditional recruitment.

- Targeted skills: Proficiency in Python, LangChain, DSPy, and LLMs, validated through AI and human screening and code reviews.

- Fit for your specific tasks: Write prompts that target very specific outcomes (generating RESTful APIs, writing unit tests, linting and formatting code, etc.).

- Flexibility: Hire for short-term tasks (generating code during a sprint), for long-term projects (creating AI-powered microservices), or full-time, with clear pricing and no termination fees.

- Delivering Results: Backed by 100+ successful projects that increased development speed and efficiency for startups, SMBs, and enterprises across sectors such as fintech, e-commerce, and healthcare.

AI Prompt Engineers: The Secret Sauce Behind ChatGPT and Code

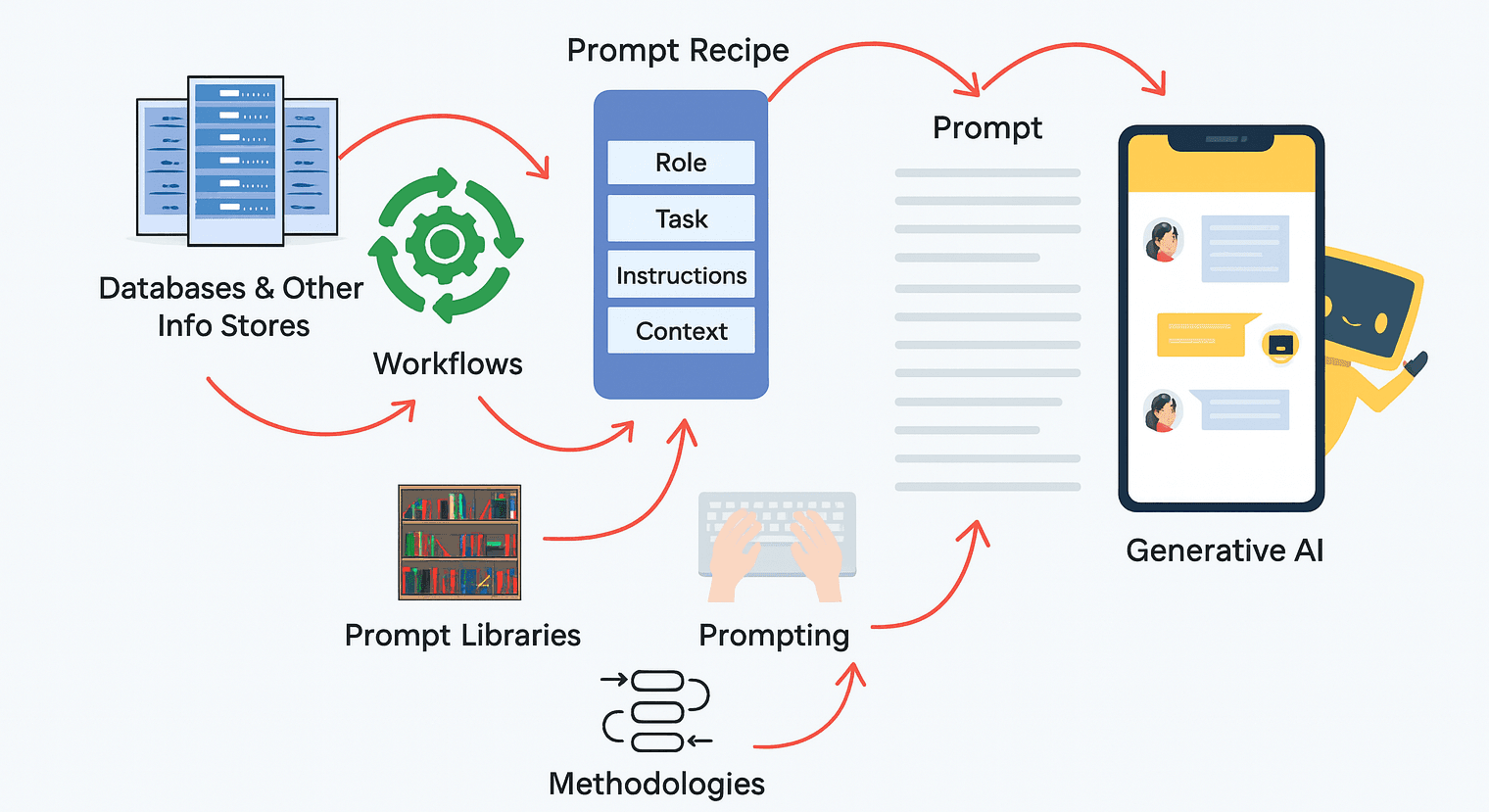

AI prompt engineers are the magicians behind the curtain who use a killer set of tools and techniques to get large language models (LLMs) to generate production-ready code, tests, API docs, and more for real-world software projects. They bring a unique blend of technical and practical problem-solving skills to the software development process to keep it running smoothly.

Popular Tools and Frameworks

- Python and Libraries: Python remains the go-to language for writing prompts, integrating LLMs into existing workflows, and automating common tasks. Libraries like transformers (Hugging Face), pytorch (AI models), and numpy (data wrangling) are their bread and butter.

- LangChain: This Python framework helps chain together multiple prompts, maintain chat history, integrate with external tools like the GitHub API or SQL databases, and build reusable LLM workflows.

- DSPy: A Python library that automates the trial-and-error of prompt tweaking, by using metrics like BLEU or ROUGE scores to automatically optimize the model’s output for specific tasks like code generation or test generation.

- TextGrad: A framework that refines a prompt iteratively using natural-language feedback from an LLM, which makes it a good fit for complex tasks such as generating database migrations.

- AutoGen: A Python library that lets you integrate multiple LLMs that can work together to accomplish a task (e.g., one model generates code, and another verifies it).

- LlamaIndex: A Python library for indexing your organization’s data (code repositories, Confluence pages, etc.) to allow LLMs to generate API docs or other outputs on-demand in a few seconds.

- FastAPI or Flask: Used to build production-ready, lightweight, and async-capable APIs that serve up LLM-generated output.

- Docker: Containers are used for versioning and making LLM workflows more robust and consistent across development, staging, and production.

- Jupyter Notebooks: To experiment with prompts, modify LLM-generated output, and visualize data.

- Postman: Used to test APIs that serve up LLM-generated output to make sure it integrates smoothly with existing systems.

- Git: Used to version control prompt templates, generated code, test suites, and other artifacts in shared repos so the team can collaborate.

Key LLMs

- GPT-4 (OpenAI): The go-to model for code writing, debugging, code completion, and generating structured outputs like JSON or YAML configuration files. JSON mode is particularly useful for consistent and predictable responses.

- Claude 3.5 Sonnet (Anthropic): Used for tasks like writing clearer documentation, providing safer code fixes and improvements, and going line-by-line with debugging.

- LLaMA 3 (Meta AI): A newer open-source model, often run on in-house servers, tuned for tasks like code generation, data processing, and research.

- Gemini 1.5 (Google): Good for more research-heavy tasks like algorithm and performance optimizations, and regex pattern generation.

- Mistral 7B: A smaller, open-source model that’s more resource-efficient and has been found to be faster than the competition for coding and NLP tasks.

- CodeLlama: A code-generation version of LLaMA that’s been fine-tuned to understand syntax better across different programming languages.
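Structured output is only predictable if the calling code checks it. The following is a minimal sketch, not any vendor's API: it assumes the model's reply arrives as a plain string and uses only the Python standard library. The function name `parse_config_reply` and the example keys are hypothetical.

```python
import json
from typing import Set


def parse_config_reply(reply: str, required_keys: Set[str]) -> dict:
    """Parse a model reply that should be a JSON object and check its keys."""
    # json.loads raises json.JSONDecodeError (a ValueError) on malformed JSON.
    data = json.loads(reply)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    missing = required_keys - set(data)
    if missing:
        raise ValueError(f"missing keys: {sorted(missing)}")
    return data


if __name__ == "__main__":
    # Hypothetical reply; in practice this string would come from the LLM.
    reply = '{"name": "orders-api", "port": 8080}'
    print(parse_config_reply(reply, {"name", "port"}))
```

Rejecting a bad reply early lets the pipeline retry the prompt instead of passing a broken configuration downstream.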

Useful Prompt Engineering Techniques

- Chain-of-Thought (CoT): Can be used for any task that benefits from breaking down a problem into a series of steps (e.g., “Write a radix sort algorithm in Go and explain each step of the algorithm”).

- Tree-of-Thought (ToT): Good for exploring different approaches to solving more complex problems (e.g., optimizing a NoSQL database query or brainstorming endpoints and schemas for a new REST API).

- Meta Prompting: For making the LLM improve its own prompts, which is a powerful way to automate and streamline many kinds of tasks (e.g., generate Swagger docs for a REST API or generate a database schema based on table descriptions).

- Few-Shot Prompting: Involves showing the LLM a few worked examples of a task before asking for a new one (e.g., “Here are two pytest test cases for an endpoint. Now write a third pytest test case for this endpoint: …”).

- Zero-Shot Prompting: The model gets only an instruction, with no examples, and relies on what it learned in training. This suits well-defined tasks such as code linting or converting Python to TypeScript.

- Self-Consistency: Running the same prompt multiple times and keeping the answer that the runs agree on most often. Useful for use cases such as code refactoring, where individual runs can vary.

- Prompt Caching: Stores and reuses the results of frequently repeated prompts (or shared prompt prefixes), for example with the LLM cache in LangChain, to cut latency and cost and keep outputs consistent.
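Two of the techniques above, few-shot prompting and self-consistency, can be sketched in a few lines of plain Python. This is an illustration under stated assumptions, not a production setup: `model` stands for any callable that takes a prompt string and returns a reply string, and `make_fake_model` is a hypothetical stand-in that replays canned replies so the example runs without an API key.

```python
from collections import Counter
from typing import Callable, Sequence, Tuple


def build_few_shot_prompt(examples: Sequence[Tuple[str, str]], task: str) -> str:
    """Place worked (input, output) pairs ahead of the new task."""
    parts = [f"Input: {inp}\nOutput: {out}" for inp, out in examples]
    parts.append(f"Input: {task}\nOutput:")
    return "\n\n".join(parts)


def self_consistent_answer(
    model: Callable[[str], str], prompt: str, samples: int = 5
) -> str:
    """Call the model several times and return the most common answer."""
    answers = [model(prompt).strip() for _ in range(samples)]
    return Counter(answers).most_common(1)[0][0]


def make_fake_model(replies: Sequence[str]) -> Callable[[str], str]:
    """Hypothetical stand-in for an LLM client: cycles through canned replies."""
    state = {"calls": 0}

    def fake_model(prompt: str) -> str:
        reply = replies[state["calls"] % len(replies)]
        state["calls"] += 1
        return reply

    return fake_model


if __name__ == "__main__":
    prompt = build_few_shot_prompt([("2 + 2", "4"), ("3 * 3", "9")], "5 - 1")
    model = make_fake_model(["4", "4", "5", "4 ", "3"])
    print(self_consistent_answer(model, prompt))  # prints 4
```

Majority voting works best when answers can be compared exactly, such as a number or a label. For free-form code, a team would more likely score each candidate, for example by running its tests, and keep the best one.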

Core Software Engineering Principles Prompt Engineers Use

Prompt engineers aren’t “playing around” with LLMs. They bring software development expertise to the table that helps the team produce better software and production-ready artifacts.

- Modularity: Prompt templates can be modularized and reused like functions (e.g., a prompt to generate CRUD endpoints for a microservice).

- Abstraction: The model’s inner workings are abstracted away from the developers, providing them with clean code, test suites, or other artifacts.

- Scalability: Prompt engineers use existing tools like LangChain or AutoGen to build scalable prompt pipelines and workflows that can handle large codebases or multi-step tasks.

- Maintainability: Keeping prompt-generating code, prompt templates, and their outputs in Git, with clear comments, helps teams collaborate more effectively.

- Reliability: CoT and self-consistency techniques can be used to reduce hallucinations (LLM errors) and increase the reliability of use cases for production code.

- Testability: Generating test cases alongside the code makes it possible to verify the generated code automatically before it ships.
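As a rough illustration of the modularity and testability points above, a prompt template can be wrapped in an ordinary function and checked like any other code. The template wording, the function name `render_crud_prompt`, and the default framework are assumptions made up for this sketch.

```python
from string import Template
from typing import List

# Hypothetical reusable template for one family of tasks.
CRUD_PROMPT = Template(
    "Generate $framework CRUD endpoints for the `$resource` resource. "
    "Fields: $fields. Return only code."
)


def render_crud_prompt(
    resource: str, fields: List[str], framework: str = "FastAPI"
) -> str:
    """Fill the template; reject empty input before it reaches the model."""
    if not fields:
        raise ValueError("at least one field is required")
    return CRUD_PROMPT.substitute(
        framework=framework, resource=resource, fields=", ".join(fields)
    )


if __name__ == "__main__":
    print(render_crud_prompt("invoice", ["id", "amount"]))
```

Because the template lives in one place and renders deterministically, it can be versioned in Git and covered by a plain unit test, independent of any model call.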

Prompt Engineering’s Role in Development

Prompt engineers and their workflows are a major productivity boost for development, taking over manual, mundane, or repetitive tasks and automating code generation for quality and consistency. According to Gartner, by 2026, 80% of developers will use generative AI, including prompt-driven workflows, for at least 10% of their work.

- Code Faster: Automated generation of boilerplate code, like API controllers or DTOs, can reduce development time by up to 40%.

- Find Bugs Earlier: Prompt workflows can help catch potential logic or pipeline errors earlier using CoT prompts.

- Increase DevOps Efficiency: Automating common DevOps tasks, from CI/CD pipelines and test generation to changelogs, can save time.

- Empower Non-Developers: Product managers, designers, testers, and others can also create test cases, technical specs, and more using well-designed prompts.

Hire an AI Prompt Engineer with Upstaff

- Step 1: Fill out our form and tell us what you’re looking for in a prompt engineer (focus on code generation? Specific LLMs or tools/frameworks like LangChain or Claude?).

- Step 2: We’ll provide you with vetted profiles in 1-3 days that match the project’s requirements and tech stack.

- Step 3: Meet the candidates online and ask them questions to evaluate how they think through prompt design, LLM integration and prompt libraries/frameworks, and relevant coding challenges.

- Step 4: Hire prompt engineers and continue to add to your DevOps and AI teams as your project scales.

Why Upstaff is the Best Place to Find AI Prompt Engineers

We have delivered over 100 AI and software development projects since 2019 and hold a 4.9/5 client rating on Upstaff. Our prompt engineers have extensive hands-on experience with Python, NLP, LLMs, and solving real-world software development challenges.

- Real Experience: Our prompt engineers are experienced with Python, LangChain, DSPy, and LlamaIndex, and with major LLMs such as GPT-4 and Claude.

- Easy to Hire: We make the hiring process simple: matching, interviewing, and onboarding with a focus on your project’s goals and requirements.

- Global Talent: Hire engineers from anywhere in the world with experience in a wide range of industries and domains from fintech to e-commerce.

Hire an AI Prompt Engineer Today

Need to generate code faster, have better tests, or automate your CI/CD pipelines? Upstaff’s AI prompt engineers can help do just that in 24-48 hours. Visit Upstaff.com or contact us to hire developers who understand AI’s power for solving software engineering problems.


Talk to Our Expert

Our journey starts with a 30-min discovery call to explore your project challenges, technical needs and team diversity.

Yaroslav Kuntsevych

co-CEO